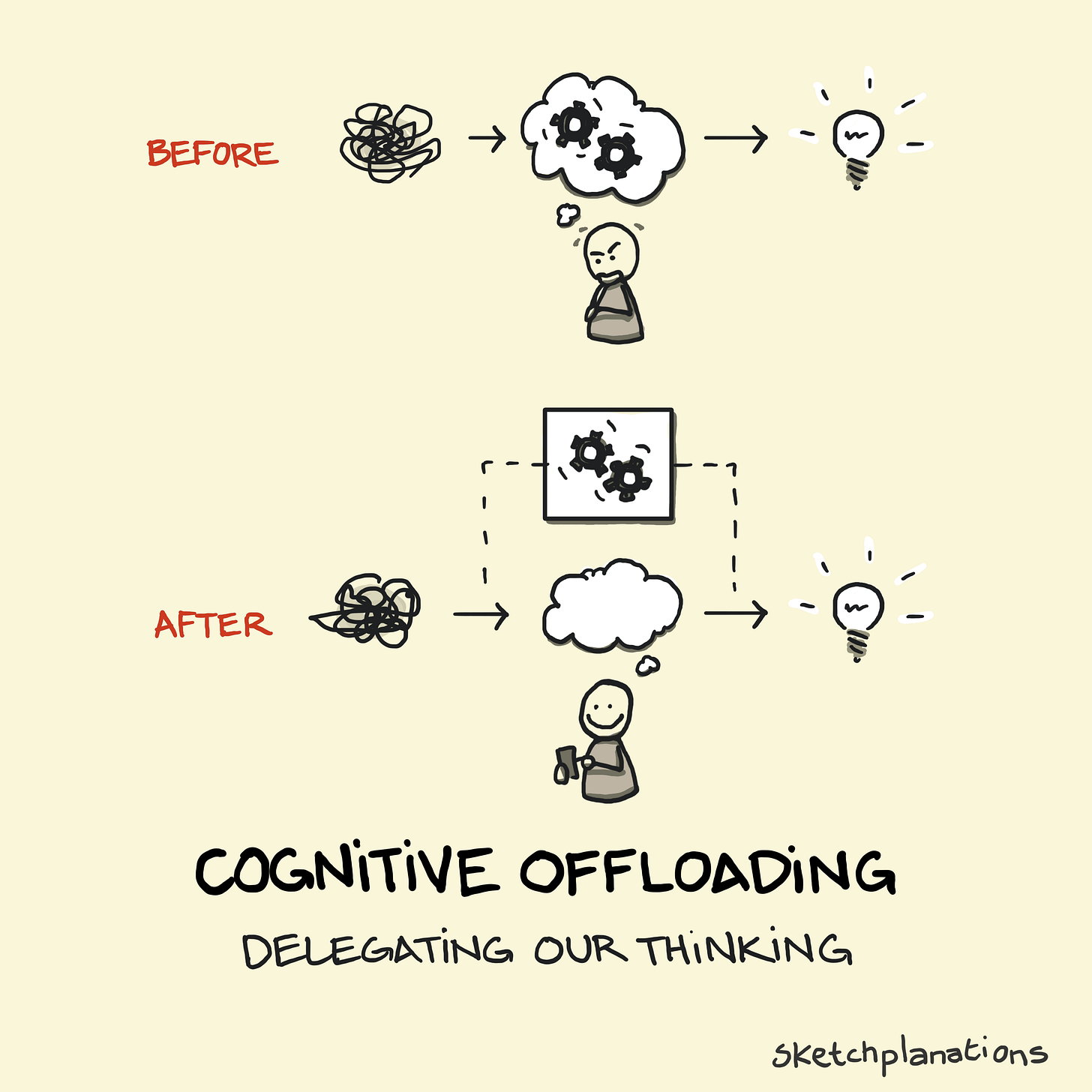

Cognitive Offloading

Delegating our thinking

3 quick things before diving into a topic that’s been on my mind a lot lately:

I have a new piece of piano music out today, called Blossom. I hope you like it: 🎧 Have a listen

The Zanclean Megaflood was spectacular and filled up the Mediterranean. We talked about the sketch and the event with the hilarious and knowledgeable (a great combination) Mike Sowden of Everything is Amazing on the podcast: Listen to the podcast

You may spot that this newsletter is now on Substack. If you’ve enjoyed it over the years and fancy hearing more from me and supporting the work, you can upgrade to paid. I’m still setting up. Any tips or fixes (dark mode?), just let me know.

To business:

I've often wondered why thinking is such hard work. If someone gives me a long list of arithmetic to do, it's tempting not to do it. If I have a tricky problem, it's easy to procrastinate and do "easier" stuff instead. Deep reflective thinking, and even shallow thinking that requires holding information in memory, feels like work. Ever been tempted to delegate adding up the scores in a board game?

I've never quite figured out where the hard work is in thinking—after all, it's not physically tiring like heading out for a 5-mile run—but it's definitely there. Hence, perhaps the tempting and irresistible rise of cognitive offloading: delegating our thinking tasks so we don't have to do them.

We've been cognitive offloading for a long time. When I carried the 2 in a long sum, I was offloading a task from my memory. I grew up remembering phone numbers; now I just pick a person from my contacts to call them, and I don't feel the change has made things worse.

Hiring employees can be a form of delegating thinking. Using a calculator, rather than a slide rule or working something out on paper, leaves more room for deeper thinking. Other common examples of cognitive offloading include notes apps, reminders, navigation systems and, now, AI chatbots.

But what about when we can offload the deeper thinking too, as we now can with AI chatbots? That may leave room for more interesting tasks. Or maybe, just as skipping my 5-mile runs would let my muscles and endurance fade, it reduces my capacity for deep thinking.

Nicholas Carr discussed a shift to shallow thinking driven by pervasive internet use in his 2010 book The Shallows. We became proficient at skimming and scanning for answers rather than interrogating and questioning. There's some evidence that more cognitive offloading reduces our critical thinking abilities, but the jury's surely still out on what the long-term effects will be.

If a developer uses an AI chatbot in an interview and gives a strong answer, will they be any worse at the job, given that they would use an AI chatbot on the job anyway?

I use ChatGPT as a thinking partner for posts these days. I think that it helps make them better, but who knows what it's really doing to me =)

Are Sketchplanations just staying surface-level, or do they engage some thinking? I'd like to think they grind a few gears. Here's hoping!

Related Ideas to Cognitive Offloading

Looking for a gift with Father’s Day coming up? Dare I say it, Big Ideas Little Pictures could be just the ticket.

1. My blood pressure is a lot lower after having listened to Blossom (twice in a row). Egads, is there anything you can't do? Stop raising the bar like this, for the sake of the rest of us.

2. SO MUCH FUN. Thank you for the opportunity, and hope I can repay it soon.

3. The only actual Substack rule I know of is: there are no rules, so feel free to be weird and break stuff wildly in all directions. But since there are no rules, that's not a rule, so at this point I would like to vanish in a puff of self-thwarted logic. Thank you.

*****

Re. Cognitive Offloading and the role of AI:

Thank you for raising this one in a sketch. Oh boy. I have so many thoughts swirling around this right now, and yet for me the whole thing feels like trying to nail jelly to the ceiling, because there are so many smart people writing about this and I need to put time aside to understand them...

My biases at work: my creative process is messy and semi-analogue. I have to write notes on a topic by hand, physically writing them with a pen on my reMarkable 2 tablet, to really feel I'm getting a grip on it (though I also use my phone, laptop and so on). And while I've tinkered with various AI services, I currently use none of them for my work, because I enjoy the process of stumbling over things myself, and I'm wary of automating and farming out a process that is teaching me to be better at my job *and* is a lot of fun. (Plus, I have enough ego that I want the satisfaction of having done the job myself, which gives me the self-confidence to do my marketing around it.)

Beyond the ethical arguments around AI tools right now - which I think usually boil down to question marks hanging over the humans behind them and the ways they use them, e.g. AI replacing experienced employees, or the current copyright battle between the UK Commons and Lords - well, I can maybe see myself using AI at some point. But one analogy sticks in my mind...

I used to play a lot of squash. Great fun, great for my fitness levels, and the closest I've ever got to becoming obsessed with a sport. (I'd love to get my fitness back to somewhere near that level, and that's a goal for myself in coming years, but - later.)

The very first time I played squash, I was 100% knackered at the end. And I remember thinking, "Oooh, I look forward to getting better at squash, so I won't be anywhere near as exhausted at the end of a match."

But this is not what happens! And it's probably not what *should* happen, as you get better at a skill. What really happens is you push yourself to your limit every time, and therefore spend all of your energy, and it's just that as you get better at doing it, your capacity increases and you achieve more. But it's no easier at the end. Or maybe only the tiniest bit. You're pretty much still as exhausted as you were as a noob - it's just that you played 5x or 10x better.

With AI, I see way too many folk saying "AI will make creativity easy". After which they'll post something that's technically impressive but creatively impoverished & narratively incoherent. It could be just the usual bout of cosplaying creative work that comes with every technical leap forward. But - if it's easy, and you're not creatively knackered at the end, have you really done anything *better*? Was your cognitive load as heavy as it could be, for your own good, in the same way heavier weights will make you stronger, but where "heavy" translates to "deeper thinking"?

I want to believe these tools can be used in truly great ways. But I think about how using a paper map can be such a different and enjoyable experience from using a digital map, and how younger generations increasingly don't know how to read one - https://www.independent.co.uk/news/uk/home-news/map-reading-millennials-smartphones-ordnance-survey-a8932976.html - and I wonder what's being invisibly lost. In the same way, the thousands of years we've spent learning to handwrite have also taught us how to think - https://everythingisamazing.substack.com/p/why-it-might-not-sink-in-until-youve - so is the speed of our move to keyboards getting in the way of our thinking?

Such a huge topic.

(And of course I had to go leave you the longest comment in Substack's history - which I wrote with a keyboard, so it may be total gibberish. Hooray!)

The main risk I see is that the automated thinking partner aborts the thinking early on and reduces the range of imaginable solutions.

If the thinking partner provides a list of ideas, the urge to think through the whole problem space -- to build a more comprehensive ontology -- can easily go missing.